Difference between revisions of "Probability distributions"

| (196 intermediate revisions by the same user not shown) | |||

| Line 1: | Line 1: | ||

| − | In | + | In probability theory and statistics, a '''probability distribution''' is the mathematical function that gives the probabilities of occurrence of different possible '''outcomes''' for an experiment. In any random experiment there is always uncertainty as to whether a particular event will or will not occur. As a measure of the chance, or probability, with which we can expect the event to occur, it is convenient to assign a number between 0 and 1. <ref name="probz">Spiegel, M. R., Schiller, J. T., & Srinivasan, A. <i>Probability and Statistics : based on Schaum’s outline of Probability and Statistics</i>, published 2001 https://ci.nii.ac.jp/ncid/BA77714681</ref> |

| − | |||

==Introduction== | ==Introduction== | ||

| − | + | Probability is the science of uncertainty. It provides precise mathematical rules for understanding and analyzing our own ignorance. It does not tell us tomorrow’s weather or next week’s stock prices; rather, it gives us a framework for working with our limited knowledge and for making sensible decisions based on what we do and do not know.<ref name="probzz"> Evans, M. J., & Rosenthal, J. S.<i>Probability and Statistics: The Science of Uncertainty</i>, published 2009, WH Freeman. </ref> | |

| − | + | == Terminology == | |

| + | === Sample space === | ||

| + | In probability theory, the sample space refers to the set of all possible outcomes of a random experiment. It is denoted by the symbol Ω (capital omega).<ref name="probiiiz"> Casella, G., & Berger, R. L. <i> Statistical Inference. </i>, published 2021, Cengage Learning. </ref> | ||

| + | |||

| + | * Let's consider an example of rolling a fair six-sided die. The sample space in this case would be {1, 2, 3, 4, 5, 6}, as these are the possible outcomes of the experiment. Each number represents the face of the die that may appear when it is rolled. <ref name="probiiiz"> Casella, G., & Berger, R. L. <i> Statistical Inference. </i>, published 2021, Cengage Learning. </ref> | ||

| + | |||

| + | === Random variable === | ||

| + | A random variable takes values determined by the outcomes in a sample space. Probabilities, in contrast, describe which values and sets of values are more likely to be taken. A random variable must be quantified; it therefore assigns a numerical value to each possible outcome in the sample space. <ref name="probzz"> Evans, M. J., & Rosenthal, J. S.<i>Probability and Statistics: The Science of Uncertainty</i>, published 2009, WH Freeman. </ref> | ||

| + | |||

| + | * For example, if the sample space for flipping a coin is {heads, tails}, then we can assign a random variable Y such that Y = 1 when the coin lands heads and Y = 0 when it lands tails. We could assign any numbers to these outcomes; 0 and 1 are simply more convenient. <ref name="probzz"> Evans, M. J., & Rosenthal, J. S.<i>Probability and Statistics: The Science of Uncertainty</i>, published 2009, WH Freeman. </ref> | ||

| + | |||

| + | * Because random variables are defined to be functions of the outcome s, and because the outcome s is assumed to be random (i.e., to take on different values with different probabilities), it follows that the value of a random variable will itself be random (as the name implies). | ||

| + | |||

| + | Specifically, if X is a random variable, then what is the probability that X will equal some particular value x? Well, X = x precisely when the outcome s is chosen such that X(s) = x. | ||

| + | |||

| + | * '''Exercise''' | ||

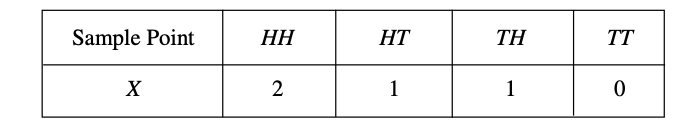

| + | ** Suppose that a coin is tossed twice so that the sample space is S = {HH, HT, TH, TT}. Let X represent the '''number of heads''' that can come up. With each sample point we can associate a number for X as shown in Table 1. Thus, for example, in the case of HH (i.e., 2 heads), X = 2 while for TH (1 head), X = 1. It follows that X is a random variable. <ref name="probzz"> Evans, M. J., & Rosenthal, J. S.<i>Probability and Statistics: The Science of Uncertainty</i>, published 2009, WH Freeman. </ref> | ||

| + | |||

| + | [[File:Tablespace.png|center|[[Probability distributions#Sample Space - sample|Table 1. Sample Space]]]] | ||

| + | |||

| + | === Expected value === | ||

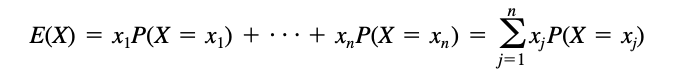

| + | A very important concept in probability is that of the '''expected value''' of a random variable. For a discrete random variable ''X'' having the possible values ''x1, ..., xn,'' the expectation of ''X'' is defined as: <ref name="probzz"> Evans, M. J., & Rosenthal, J. S.<i>Probability and Statistics: The Science of Uncertainty</i>, published 2009, WH Freeman. </ref> | ||

| + | |||

| + | [[File:ex.png|center|]] | ||

| + | |||

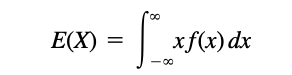

| + | For a continuous random variable ''X'' having density function ''f(x)'', the expectation of ''X'' is defined as | ||

| + | [[File:excont.png|center|]] | ||

| + | |||

| + | ==== Example ==== | ||

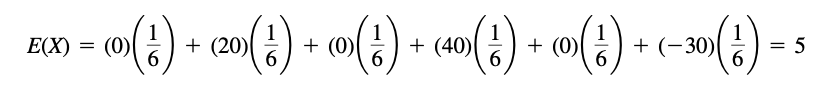

| + | Suppose that a game is to be played with a single die assumed fair. In this game a player wins $20 if a 2 turns up, $40 if a 4 turns up; loses $30 if a 6 turns up; while the player neither wins nor loses if any other face turns up. Find the expected sum of money to be won. | ||

| + | |||

| + | [[File:exex.png|center|]] | ||

| + | |||

| + | === Variance and standard deviation === | ||

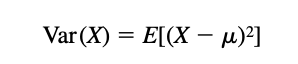

| + | Another important quantity in probability is called the '''variance''' defined by: | ||

| + | [[File:var.png|center|]] | ||

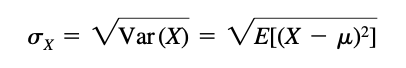

| + | The variance is a nonnegative number. The positive square root of the variance is called the '''standard deviation''' | ||

| + | and is given by | ||

| + | [[File:standdev.png|center|]] | ||

| + | |||

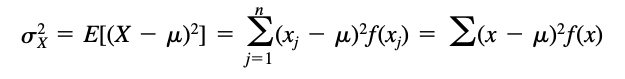

| + | *If ''X'' is a '''discrete''' random variable taking the values ''x1, x2, . . . , xn'' and having probability function ''f(x)'', then the variance is given by | ||

| + | [[File:vardisc.png|center|]] | ||

| + | |||

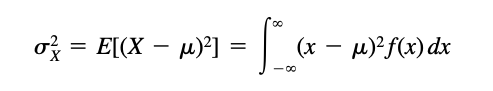

| + | *If ''X'' is a '''continuous''' random variable having density function ''f(x)'', then the variance is given by | ||

| + | [[File:varcont.png|center|]] | ||

| + | |||

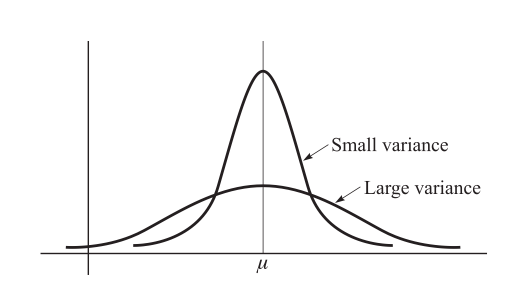

| + | *A '''graphical representation''' of the variance for two continuous distributions with the same mean ''μ'' can be seen in the graph below | ||

| + | [[File:vargraph.png|center|]] | ||

| + | |||

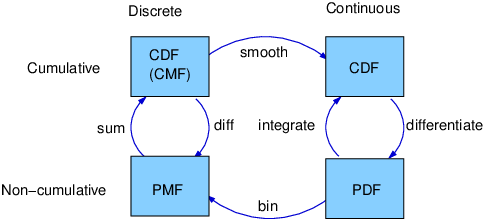

| + | == PMF vs. PDF vs. CDF == | ||

| + | In probability theory there are three functions that can be a little confusing at first. Let's make the differences clear. | ||

| + | [[File:pdf.png|center]] | ||

| + | |||

| + | ==== Probability mass function (PMF) ==== | ||

| + | *The probability mass function, denoted as ''P(X = x)'', is used for discrete random variables. It assigns probabilities to each possible value that the random variable can take. The PMF gives the probability that the random variable equals a specific value. | ||

| + | |||

| + | ==== Cumulative distribution function (CDF) ==== | ||

| + | * The cumulative distribution function, denoted as ''F(x)'', describes the probability that a random variable takes on a value less than or equal to a given value ''x''. It gives the cumulative probability up to a specific point. | ||

| + | * Since the PDF is the derivative of the CDF, '''the CDF can be obtained from PDF by integration''' | ||

| + | |||

| + | ==== Probability density function (PDF) ==== | ||

| + | To determine the distribution of a '''discrete''' random variable we can either provide its '''PMF''' or '''CDF'''. For '''continuous''' random variables, the CDF is well-defined so we can provide the '''CDF'''. However, the PMF does '''not''' work for continuous random variables, because for a continuous random variable ''P(X=x)=0'' for all x ∈ ℝ. | ||

| + | *Instead, we can usually define the probability density function (PDF). The '''PDF''' is the density of probability rather than the probability mass. The concept is very similar to mass density in physics: its unit is probability per unit length. <ref name="probz">Spiegel, M. R., Schiller, J. T., & Srinivasan, A. <i>Probability and Statistics : based on Schaum’s outline of Probability and Statistics</i>, published 2001 https://ci.nii.ac.jp/ncid/BA77714681</ref> | ||

| + | |||

| + | * The probability density function (PDF) is a function used to describe the probability distribution of a continuous random variable. Unlike discrete random variables, which have a countable set of possible values, continuous random variables can take on any value within a specified range. <ref name="probz">Spiegel, M. R., Schiller, J. T., & Srinivasan, A. <i>Probability and Statistics : based on Schaum’s outline of Probability and Statistics</i>, published 2001 https://ci.nii.ac.jp/ncid/BA77714681</ref> | ||

| − | + | * The PDF, denoted as ''f(x)'', represents the density of the probability distribution of a continuous random variable at a given point ''x''. It indicates how likely the random variable is to fall near a specific value; the probability of falling within a specific range of values is the area under ''f(x)'' over that range. | |

| − | < | + | * Since the '''PDF is the derivative of the CDF''', the CDF can be obtained from PDF by integration <ref name="probz">Spiegel, M. R., Schiller, J. T., & Srinivasan, A. <i>Probability and Statistics : based on Schaum’s outline of Probability and Statistics</i>, published 2001 https://ci.nii.ac.jp/ncid/BA77714681</ref> |

| − | + | [[File:statszz.png|center|]] | |

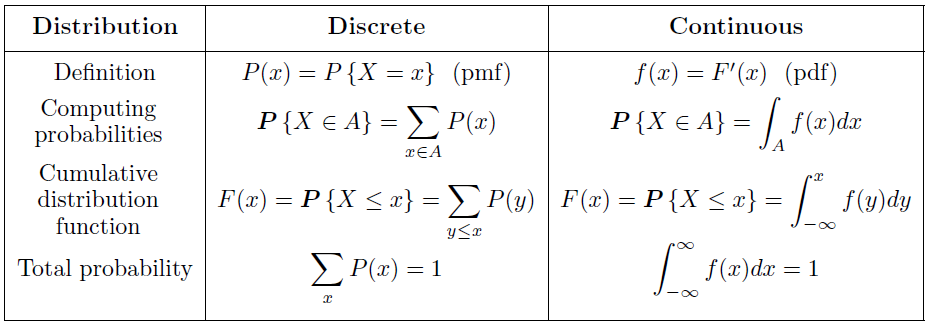

| − | + | == Distribution Functions for Random Variables == | |

| − | + | The distribution function provides important information about the probabilities associated with different values of a random variable. It can be used to calculate probabilities for specific events or to obtain other statistical properties of the random variable. <ref name="probz">Spiegel, M. R., Schiller, J. T., & Srinivasan, A. <i>Probability and Statistics : based on Schaum’s outline of Probability and Statistics</i>, published 2001 https://ci.nii.ac.jp/ncid/BA77714681</ref> | |

| − | + | *It gives the probability that the random variable takes on a value less than or equal to a given value. | |

| + | The distribution function of a random variable X, denoted as '''F(x)''', is defined as: <ref name="probz">Spiegel, M. R., Schiller, J. T., & Srinivasan, A. <i>Probability and Statistics : based on Schaum’s outline of Probability and Statistics</i>, published 2001 https://ci.nii.ac.jp/ncid/BA77714681</ref> | ||

| − | + | *F(x) = P(X ≤ x) | |

| − | + | where x is any real number, and P(X ≤ x) is the probability that the random variable X is less than or equal to x. <ref name="probz">Spiegel, M. R., Schiller, J. T., & Srinivasan, A. <i>Probability and Statistics : based on Schaum’s outline of Probability and Statistics</i>, published 2001 https://ci.nii.ac.jp/ncid/BA77714681</ref> | |

| − | + | === Distribution Functions for Discrete Random Variables === | |

| − | + | If X takes on only a finite number of values ''x1, x2, . . . , xn,'' then the distribution function is given by <br> | |

| − | + | [[File:discreteF.png|thumb|center|[[Probability distributions#Uniform distribution - discrete|Distribution function of a discrete variable]]]] | |

| − | # | ||

| − | |||

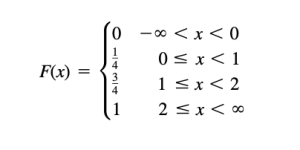

| − | + | ==== Example ==== | |

| + | The following function: [[File:discreteEx.png|thumb|center]] | ||

| + | Can be graphed as follows: [[File:Grafdiscrete.png|center]] | ||

| − | + | #The magnitudes of the jumps are 1/4, 1/2, 1/4 which are precisely the probabilities from the function. This fact enables one to obtain the probability function from the distribution function. | |

| + | # Because of the appearance of the graph it is often called a ''staircase function'' or ''step function''. | ||

| + | #The value of the function at an integer is obtained from the higher step; thus the value at 1 is 3/4 and not 1/4. This is expressed mathematically by stating that the distribution function is continuous from the right at 0, 1, 2. As we proceed from left to right (i.e. going upstairs), the distribution function either remains the same or increases, taking on values from 0 to 1. Because of this, it is said to be a monotonically increasing (more precisely, nondecreasing) function. | ||

| − | + | === Distribution Functions for Continuous Variables === | |

| − | [[File: | + | * A nondiscrete random variable X is said to be absolutely continuous, or simply continuous, if its distribution function may be represented as |

| + | [[File:cont.png|center]] | ||

| − | + | * where the function ''f(x)'' has the properties | |

| + | [[File:contprop.png|center]] | ||

| + | |||

| + | * The graphical representation of a possible probability density function (PDF) ''f(x)'' and its cumulative distribution function (CDF) ''F(x)'' is given by the graph below: | ||

| + | [[File:graphcont.png|center]] | ||

| − | + | == Special Probability Distributions == | |

| − | === | + | === The Uniform Distribution === |

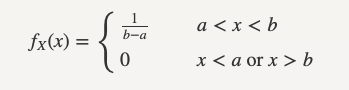

| − | + | A continuous random variable ''X'' is said to have a '''Uniform''' distribution over the interval [a,b], shown as ''X ∼ Uniform(a,b)'', if its PDF is given by <ref name="probiz"> Pishro-Nik, H. <i> Introduction to Probability, Statistics, and Random Processes</i>, published 2014, https://www.probabilitycourse.com/preface.php</ref> | |

| − | + | [[File:unifun.png|center]] | |

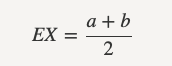

| − | + | The '''expected value''' is therefore | |

| − | + | [[File:uniex.png|center]] | |

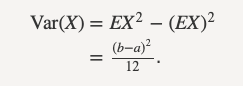

| − | + | and '''variance''' | |

| + | [[File:univar.png|center]] | ||
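The mean and variance of a uniform distribution can be checked numerically. The sketch below assumes the standard results for ''X ∼ Uniform(a,b)'', namely density 1/(b − a) on [a,b], mean (a + b)/2 and variance (b − a)²/12; the helper names `uniform_pdf` and `integrate` and the interval [2, 5] are illustrative choices only.

```python
def uniform_pdf(x, a, b):
    """Density of Uniform(a, b): constant on [a, b], zero elsewhere."""
    return 1.0 / (b - a) if a <= x <= b else 0.0


def integrate(g, lo, hi, steps=10_000):
    """Midpoint-rule approximation of the integral of g over [lo, hi]."""
    h = (hi - lo) / steps
    return h * sum(g(lo + (i + 0.5) * h) for i in range(steps))


a, b = 2.0, 5.0  # illustrative interval

total = integrate(lambda x: uniform_pdf(x, a, b), a, b)
mean = integrate(lambda x: x * uniform_pdf(x, a, b), a, b)
variance = integrate(lambda x: (x - mean) ** 2 * uniform_pdf(x, a, b), a, b)

print("total probability:", total)
print("mean:", mean, "expected:", (a + b) / 2)
print("variance:", variance, "expected:", (b - a) ** 2 / 12)
```

The numerical values should agree with the closed-form expressions up to a small integration error.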

| − | === | + | ==== Example ==== |

| − | * | + | * When you flip a coin, the probability of the coin landing with a head faced up is equal to the probability that it lands with a tail faced up. |

| − | + | * When a fair die is rolled, the number appearing on the top of the die follows a (discrete) uniform distribution over the values one to six: each number appears with probability 1/6. | |

| − | * | ||

| − | |||

| − | |||

| − | === | + | === The Normal Distribution === |

| − | + | The '''normal distribution''' is by far the most important probability distribution. One of the main reasons for this is the Central Limit Theorem (CLT). | |

| − | *'' | + | * The notation for the random variable is written as ''X ∼ N(μ,σ)''. |

| + | * Also called the '''Gaussian''' distribution, the density function for this distribution is given by | ||

| + | [[File:normf.png|center]] | ||

| + | where μ and σ are the mean and standard deviation, respectively. | ||

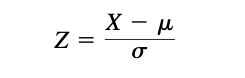

| − | + | * Let Z be the standardized variable corresponding to X | |

| − | * | + | [[File:normz.png|center]] |

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | + | ==== Some Properties of the Normal Distribution ==== | |

| − | + | [[File:normprop.png|center]] | |

| − | |||

| − | |||

| − | |||

| − | |||

| − | + | ==== Graphical representation ==== | |

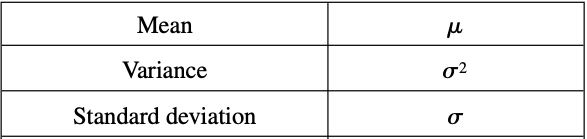

| + | The graph of the density function of the standardized variable, sometimes called the '''standard normal curve''', is shown below. The areas within 1, 2, and 3 standard deviations of the mean are indicated. | ||

| + | [[File:normgrafh.png|center]] | ||
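The areas within 1, 2, and 3 standard deviations can be computed with a short sketch. It assumes the usual standardization Z = (X − μ)/σ and expresses the standard normal CDF through the error function from Python's `math` module; the function names and the values μ = 100, σ = 15 are illustrative only.

```python
from math import erf, sqrt


def standard_normal_cdf(z):
    """P(Z <= z) for a standard normal variable Z."""
    return 0.5 * (1.0 + erf(z / sqrt(2.0)))


def standardize(x, mu, sigma):
    """Convert a value of X ~ N(mu, sigma) to the standardized scale."""
    return (x - mu) / sigma


# Area under the standard normal curve within k standard deviations
for k in (1, 2, 3):
    area = standard_normal_cdf(k) - standard_normal_cdf(-k)
    print(f"P(-{k} <= Z <= {k}) = {area:.4f}")

# Illustrative values: P(85 <= X <= 115) for mu = 100, sigma = 15
mu, sigma = 100.0, 15.0
prob = (standard_normal_cdf(standardize(115.0, mu, sigma))
        - standard_normal_cdf(standardize(85.0, mu, sigma)))
print(f"P(85 <= X <= 115) = {prob:.4f}")
```

The three areas come out at roughly 68.27%, 95.45% and 99.73%.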

| − | + | ==== Central Limit Theorem (CLT) ==== | |

| + | The central limit theorem (CLT) is one of '''the most important results in probability theory'''. It tells us that, under certain conditions, the sum of a large number of random variables is approximately normal. | ||

| − | + | The central limit theorem states that if you have a population with mean μ and standard deviation σ and take sufficiently large random samples from the population with replacement, then the distribution of the sample means will be approximately normally distributed. This will hold true regardless of whether the source population is normal or skewed, provided the sample size is sufficiently large (usually '''n > 30'''). If the population is normal, then the theorem holds true even for samples smaller than 30. | |
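A small simulation can illustrate the theorem. The sketch below is only an illustration under chosen assumptions: the population is Exponential(1) (strongly skewed, with μ = 1 and σ = 1), the sample size is n = 40, and 5,000 samples are drawn with a fixed seed. If the theorem applies, the sample means should centre on μ with spread close to σ/√n, and about 68% of them should lie within one such spread of μ.

```python
import random
from statistics import mean, stdev

random.seed(0)  # fixed seed so the run is repeatable

n = 40               # size of each sample (illustrative choice)
num_samples = 5_000  # number of samples drawn (illustrative choice)

# Skewed population: Exponential(1), with mean 1 and standard deviation 1
sample_means = [
    mean([random.expovariate(1.0) for _ in range(n)])
    for _ in range(num_samples)
]

centre = mean(sample_means)
spread = stdev(sample_means)
theoretical_spread = 1.0 / n ** 0.5

# Share of sample means within one theoretical spread of the population mean
within_one = sum(
    abs(m - 1.0) <= theoretical_spread for m in sample_means
) / num_samples

print("mean of sample means:", centre)
print("std of sample means:", spread, "vs sigma/sqrt(n):", theoretical_spread)
print("share within one sigma/sqrt(n):", within_one)
```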

| − | |||

| − | + | === The Binomial Distribution === | |

| − | + | * Suppose that we have an experiment such as tossing a coin repeatedly or choosing a marble from an urn repeatedly. | |

| − | + | * Each toss or selection is called a ''trial''. | |

| − | + | *In any single trial there will be a probability associated with a particular event such as head on the coin, 4 on the die, or selection of a red marble. In some cases this probability will not change from one trial to the next (as in tossing a coin or die). | |

| + | * Such trials are then said to be independent and are often called Bernoulli trials after James Bernoulli who investigated them at the end of the seventeenth century. | ||

| + | * If ''n'' is large and if neither ''p'' nor ''q'' is too close to zero, the binomial distribution can be closely approximated by a normal distribution. | ||

| − | |||

| − | |||

| − | |||

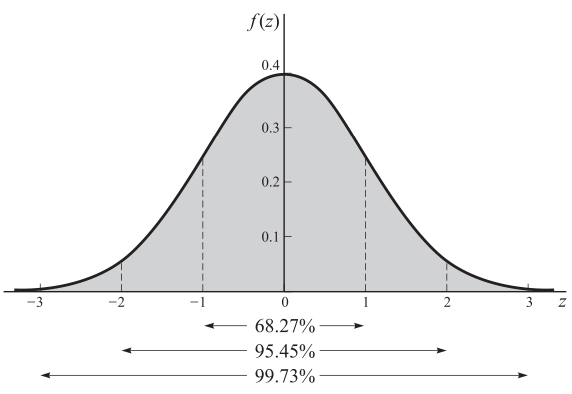

| − | + | Let ''p'' be the probability that an event will happen in any single Bernoulli trial (called the probability of success). Then ''q = 1 − p'' is the probability that the event will fail to happen in any single trial (called the probability of failure). The probability that the event will happen exactly ''x'' times in ''n'' trials (i.e., ''x'' successes and ''n − x'' failures will occur) is given by the probability function | |

| + | [[File:binom.png|center]] | ||

| − | + | The key characteristics of a binomial distribution are as follows: | |

| + | # The trials are independent: The outcome of each trial does not depend on the outcome of any other trial. | ||

| + | # Each trial has two possible outcomes: success or failure. | ||

| + | # The probability of success remains constant across all trials, denoted as ''p''. | ||

| + | # The number of trials is fixed, denoted as ''n''. | ||

| − | + | '''Some Properties of Binomial Distribution''' | |

| − | + | [[File:binomprop.png|center]] | |

| − | |||

| − | |||

| − | The | + | ==== Example ==== |

| + | The probability of getting exactly 2 heads in 6 tosses of a fair coin is: | ||

| + | [[File:binomEx.png|center]] | ||
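The same calculation can be sketched in code. The function below assumes the standard binomial probability function, C(n, x) p^x q^(n − x); the name `binomial_pmf` is illustrative, and exact fractions are used so that the result is not rounded.

```python
from fractions import Fraction
from math import comb


def binomial_pmf(x, n, p):
    """Probability of exactly x successes in n independent trials."""
    return comb(n, x) * p ** x * (1 - p) ** (n - x)


p = Fraction(1, 2)  # fair coin
prob_two_heads = binomial_pmf(2, 6, p)
print("P(X = 2) =", prob_two_heads)  # 15/64

# Sanity checks: probabilities sum to 1 and the mean equals n * p
total = sum(binomial_pmf(x, 6, p) for x in range(7))
mean = sum(x * binomial_pmf(x, 6, p) for x in range(7))
print("total =", total, "mean =", mean)
```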

| − | === | + | === The Bernoulli Distribution === |

| − | + | *Bernoulli distributions arise anytime we have a response variable that takes only two possible values, and we label one of these outcomes as 1 and the other as 0. | |

| − | + | * For example, 1 could correspond to success and 0 to failure of some quality test applied to an item produced in a manufacturing process. | |

| + | * Alternatively, we could be randomly selecting an individual from a population and recording a 1 when the individual is female and a 0 if the individual is a male. In this case, the Bernoulli parameter θ (the probability of recording a 1) is the proportion of females in the population. | ||

| + | *The binomial distribution is applicable to any situation involving ''n'' independent performances of a random system; for each performance, we are recording whether a particular event has occurred, called a ''success'', or has not occurred, called a ''failure''. | ||

| + | ==== Difference between the Binomial and Bernoulli Distribution ==== | ||

| + | *The binomial distribution is derived from multiple independent Bernoulli trials. It represents the number of successes in these trials. | ||

| + | *Each trial in the binomial distribution follows a Bernoulli distribution. | ||

| + | *The Bernoulli distribution models a single trial with two possible outcomes, while the binomial distribution models the number of successes in a fixed number of independent Bernoulli trials. The binomial distribution extends the concept of the Bernoulli distribution to multiple trials. | ||

| − | + | === Multinomial Distribution === | |

| − | + | Suppose that events ''A1, A2, . . . , Ak'' are mutually exclusive, and can occur with respective probabilities ''p1, p2, . . . , pk'' where ''p1 + p2 + ... + pk = 1''. If ''X1 , X2 , . . . , Xk'' are the random variables respectively giving the number of times that ''A1 , A2 , . . . , Ak'' occur in a total of ''n'' trials, so that ''X1 + X2 + ... + Xk = n'', then | |

| + | [[File:multinomm.png|center]] | ||

| + | * It is a generalization of the Binomial distribution | ||

| − | === | + | ==== Example ==== |

| − | + | If a fair die is to be tossed 12 times, the probability of getting 1, 2, 3, 4, 5 and 6 points exactly twice | |

| + | each is | ||

| + | [[File:multinommex.png|center]] | ||
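As a hedged sketch, the die example can be evaluated with the standard multinomial probability function, n!/(n1! ... nk!) · p1^n1 ... pk^nk. The function name `multinomial_pmf` is illustrative; exact fractions avoid rounding.

```python
from fractions import Fraction
from math import factorial


def multinomial_pmf(counts, probs):
    """P(X1 = counts[0], ..., Xk = counts[k-1]) over n = sum(counts) trials."""
    coefficient = factorial(sum(counts))
    for c in counts:
        coefficient //= factorial(c)  # each division is exact
    result = Fraction(coefficient)
    for c, p in zip(counts, probs):
        result *= p ** c
    return result


# Fair die tossed 12 times, each face appearing exactly twice
die = [Fraction(1, 6)] * 6
prob = multinomial_pmf([2] * 6, die)
print(prob, "=", float(prob))  # 1925/559872, about 0.00344

# With k = 2 the formula reduces to the binomial distribution
coin = [Fraction(1, 2)] * 2
print(multinomial_pmf([2, 4], coin))  # 15/64
```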

| − | + | === The Poisson Distribution === | |

| + | *The Poisson distribution models the number of times an event occurs in a fixed interval of time, when the events occur independently of one another and at a known constant mean rate. | ||

| + | *In other words, Poisson distribution is used to estimate how many times an event is likely to occur within the given period of time. | ||

| + | * Poisson distribution has wide use in the fields of business as well as in biology. | ||

| − | + | The probability function is given by | |

| + | [[File:poissonfun.png|center]] | ||

| + | where ''λ'' is the Poisson rate parameter, equal to the expected number of events in the fixed time interval. | ||

| − | + | ==== Some properties of Poisson distribution ==== | |

| + | [[File:poissonprop.png|center]] | ||

| − | + | ==== Binomial and Poisson approximation ==== | |

| + | In the binomial distribution, if ''n'' is large while the probability ''p'' of occurrence of an event is close to zero, so that ''q = 1 - p'' is close to 1, the event is called a ''rare'' event. In practice we shall consider an event as rare if the number of trials is at least 50 (n ≥ 50) while ''np'' is less than 5. For such cases the binomial distribution is very closely approximated by the Poisson distribution with ''λ = np''. | ||

| + | '''Example''' | ||

| + | * Ten percent of the tools produced in a certain manufacturing process turn out to be defective. Find the probability that in a sample of 10 tools chosen at random, exactly 2 will be defective, by using '''(1)''' the binomial distribution, '''(2)''' the Poisson approximation to the binomial distribution. | ||

| − | + | # The probability of a defective tool is ''p = 0.1''. Let ''X'' denote the number of defective tools out of 10 chosen. Then, according to the binomial distribution | |

| + | [[File:poissonprii.png|center]] | ||

| + | # We have ''λ = np = (10)(0.1)'' = 1. Then, according to the Poisson distribution, | ||

| + | [[File:poissonpri.png|center]] | ||

| + | *In general, the approximation is good if ''p ≤ 0.1'' and ''λ = np ≤ 5''. | ||
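The two answers in the tool example can be compared directly. The sketch below assumes the standard binomial probability function and the standard Poisson probability function λ^x e^(−λ)/x!; the function names are illustrative.

```python
from math import comb, exp, factorial


def binomial_pmf(x, n, p):
    """Exact probability of x successes in n independent trials."""
    return comb(n, x) * p ** x * (1 - p) ** (n - x)


def poisson_pmf(x, lam):
    """Poisson probability of x events when the expected count is lam."""
    return lam ** x * exp(-lam) / factorial(x)


n, p, x = 10, 0.1, 2  # 10 tools, 10% defective, exactly 2 defective

exact = binomial_pmf(x, n, p)
approx = poisson_pmf(x, n * p)  # lambda = np = 1

print(f"binomial: {exact:.4f}")  # about 0.1937
print(f"Poisson:  {approx:.4f}")  # about 0.1839
```

The gap of roughly 0.01 reflects the small sample size here (n = 10); the approximation tightens as ''n'' grows with ''np'' held fixed.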

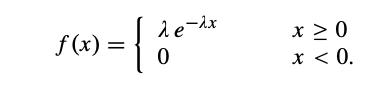

| − | + | === The Exponential Distribution === | |

| + | The exponential distribution is one of the widely used continuous distributions. It is often used to model the time elapsed between events. <ref name="probiz"> Pishro-Nik, H. <i> Introduction to Probability, Statistics, and Random Processes</i>, published 2014, https://www.probabilitycourse.com/preface.php</ref> | ||

| − | = | + | * It is defined by: <ref name="probzz"> Evans, M. J., & Rosenthal, J. S.<i>Probability and Statistics: The Science of Uncertainty</i>, published 2009, WH Freeman. </ref> |

| − | + | [[File:exponentialol.png|center]] | |

| − | |||

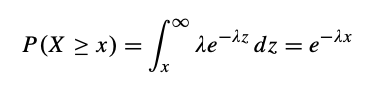

| − | + | * An exponential distribution can often be used to model lifelengths. For example, the lifelength of a certain type of light bulb produced by a manufacturer might follow an ''Exponential(λ)'' distribution for an appropriate choice of ''λ''. The probability that the lifelength ''X'' of a randomly selected light bulb from this manufacturer exceeds ''x'' units of time can be calculated as follows:<ref name="probzz"> Evans, M. J., & Rosenthal, J. S.<i>Probability and Statistics: The Science of Uncertainty</i>, published 2009, WH Freeman. </ref> | |

| − | + | [[File:exponentialoll.png|center]] | |

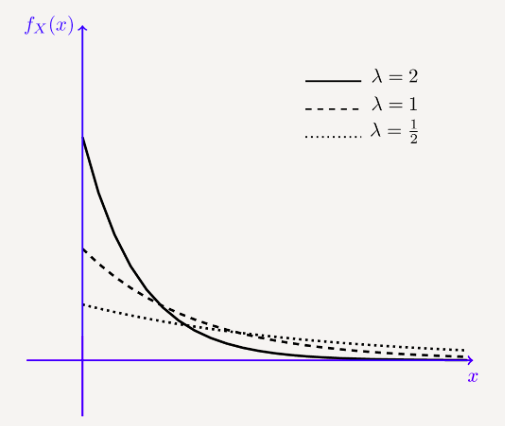

| − | + | * The graph of the ''Exponential(λ)'' distribution depends on the ''λ'' parameter: <ref name="probzz"> Evans, M. J., & Rosenthal, J. S.<i>Probability and Statistics: The Science of Uncertainty</i>, published 2009, WH Freeman. </ref> | |

| − | + | [[File:exponentiagraph.png|center]] | |

| − | |||

| − | |||

| − | |||

| − | |||

| − | + | ==== Example ==== | |

| + | * Imagine you are at a store and are waiting for the next customer. In each millisecond, the probability that a new customer enters the store is very small. You can imagine that, in each millisecond, a coin (with a very small P(H)) is tossed, and if it lands heads a new customer enters. If you toss a coin every millisecond, the time until a new customer arrives approximately follows an exponential distribution.<ref name="probiz"> Pishro-Nik, H. <i> Introduction to Probability, Statistics, and Random Processes</i>, published 2014, https://www.probabilitycourse.com/preface.php</ref> | ||
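The coin-per-millisecond picture can be checked numerically. In the sketch below the arrival rate (0.5 customers per second) is an assumed value chosen for illustration, and the heads probability per millisecond is taken as rate × 0.001. The probability of waiting longer than ''t'' seconds under the coin model is compared with the exponential value e^(−λt).

```python
from math import exp

rate = 0.5           # assumed arrival rate, customers per second
dt = 0.001           # one millisecond, in seconds
p_heads = rate * dt  # small probability of an arrival in each millisecond


def prob_no_arrival_discrete(t):
    """P(no heads in the first t seconds) under the coin-toss model."""
    tosses = round(t / dt)
    return (1.0 - p_heads) ** tosses


def prob_no_arrival_exponential(t):
    """P(X > t) for X ~ Exponential(rate)."""
    return exp(-rate * t)


for t in (1.0, 3.0, 10.0):
    print(t, prob_no_arrival_discrete(t), prob_no_arrival_exponential(t))
```

The two columns agree to about three decimal places, and the agreement improves as the time step shrinks.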

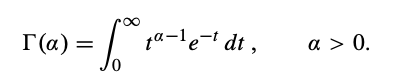

| − | + | === The Gamma Distribution === | |

| + | The gamma function is defined by | ||

| + | [[File:gammafun.png|center]] | ||

| + | where, | ||

| + | [[File:gammafunnz.png|center]] | ||

| − | = | + | * The case α = 1 corresponds to the Exponential(λ) distribution: Gamma(1, λ) = Exponential(λ) <ref name="probzz"> Evans, M. J., & Rosenthal, J. S.<i>Probability and Statistics: The Science of Uncertainty</i>, published 2009, WH Freeman. </ref> |
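The identity Gamma(1, λ) = Exponential(λ) can be verified numerically. The sketch assumes the rate parameterization of the gamma density, f(x) = λ^α x^(α − 1) e^(−λx) / Γ(α) for x > 0, and uses `math.gamma` for the gamma function; the function names are illustrative.

```python
from math import exp, gamma, isclose


def gamma_pdf(x, alpha, lam):
    """Density of Gamma(alpha, lam) in the rate parameterization."""
    if x <= 0:
        return 0.0
    return lam ** alpha * x ** (alpha - 1) * exp(-lam * x) / gamma(alpha)


def exponential_pdf(x, lam):
    """Density of Exponential(lam)."""
    return lam * exp(-lam * x) if x > 0 else 0.0


lam = 2.0  # illustrative rate
for x in (0.1, 1.0, 2.5):
    print(x, gamma_pdf(x, 1.0, lam), exponential_pdf(x, lam))

# The gamma function extends the factorial: gamma(n) = (n - 1)!
print(gamma(5))  # 24.0
```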

| − | |||

| − | |||

| − | |||

| − | |||

| − | '' | + | ==== Example ==== |

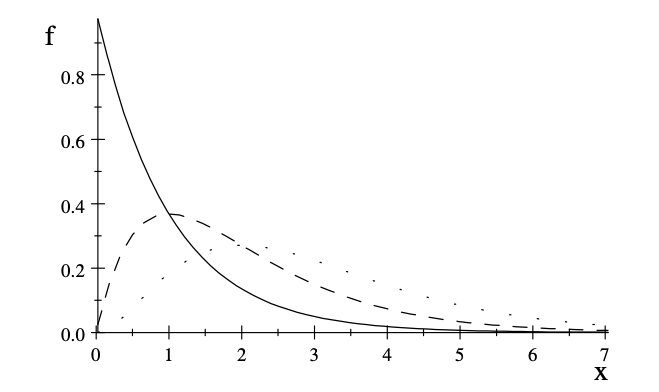

| + | In the following graph, we can see the ''Exponential distribution'' function (solid line) and the ''Gamma function'' (dotted line) plotted:<ref name="probzz"> Evans, M. J., & Rosenthal, J. S.<i>Probability and Statistics: The Science of Uncertainty</i>, published 2009, WH Freeman. </ref> | ||

| + | [[File:gammafunynz.png|center]] | ||

| − | + | == References == | |

| + | <references/> | ||

Latest revision as of 19:06, 1 June 2023

In probability theory and statistics, a probability distribution is the mathematical function that gives the probabilities of occurrence of different possible outcomes for an experiment. In any random experiment there is always uncertainty as to whether a particular event will or will not occur. As a measure of the chance, or probability, with which we can expect the event to occur, it is convenient to assign a number between 0 and 1. [1]

Contents

- 1 Introduction

- 2 Terminology

- 3 PMF vs. PDF vs. CDF

- 4 Distribution Functions for Random Variables

- 5 Special Probability Distributions

- 6 References

Introduction

Probability is the science of uncertainty. It provides precise mathematical rules for understanding and analyzing our own ignorance. It does not tell us tomorrow’s weather or next week’s stock prices; rather, it gives us a framework for working with our limited knowledge and for making sensible decisions based on what we do and do not know.[2]

Terminology

Sample space

In probability theory, the sample space refers to the set of all possible outcomes of a random experiment. It is denoted by the symbol Ω (capital omega).[3]

- Let's consider an example of rolling a fair six-sided die. The sample space in this case would be {1, 2, 3, 4, 5, 6}, as these are the possible outcomes of the experiment. Each number represents the face of the die that may appear when it is rolled. [3]

Random variable

A random variable takes values determined by the outcomes in a sample space. Probabilities, in contrast, describe which values and sets of values are more likely to be taken. A random variable must be quantified; it therefore assigns a numerical value to each possible outcome in the sample space. [2]

- For example, if the sample space for flipping a coin is {heads, tails}, then we can assign a random variable Y such that Y = 1 when the coin lands heads and Y = 0 when it lands tails. We could assign any numbers to these outcomes; 0 and 1 are simply more convenient. [2]

- Because random variables are defined to be functions of the outcome s, and because the outcome s is assumed to be random (i.e., to take on different values with different probabilities), it follows that the value of a random variable will itself be random (as the name implies).

Specifically, if X is a random variable, then what is the probability that X will equal some particular value x? Well, X = x precisely when the outcome s is chosen such that X(s) = x.

- Exercise

- Suppose that a coin is tossed twice so that the sample space is S = {HH, HT, TH, TT}. Let X represent the number of heads that can come up. With each sample point we can associate a number for X as shown in Table 1. Thus, for example, in the case of HH (i.e., 2 heads), X = 2 while for TH (1 head), X = 1. It follows that X is a random variable. [2]
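As a rough sketch, the exercise can be reproduced by enumerating the sample space and counting heads. The code assumes a fair coin, so that the four outcomes are equally likely; the names `sample_space`, `X` and `pmf` are illustrative only.

```python
from collections import Counter
from fractions import Fraction
from itertools import product

# Sample space for two tosses: HH, HT, TH, TT
sample_space = ["".join(toss) for toss in product("HT", repeat=2)]

# The random variable X maps each outcome to its number of heads
X = {outcome: outcome.count("H") for outcome in sample_space}

# Assumption: a fair coin, so every outcome has probability 1/4
counts = Counter(X.values())
pmf = {x: Fraction(n, len(sample_space)) for x, n in sorted(counts.items())}

for outcome in sample_space:
    print(outcome, "->", X[outcome])
for x, p in pmf.items():
    print(f"P(X = {x}) = {p}")
```

Under this assumption X takes the values 0, 1, 2 with probabilities 1/4, 1/2, 1/4.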

Expected value

A very important concept in probability is that of the expected value of a random variable. For a discrete random variable X having the possible values x1, ..., xn, the expectation of X is defined as: [2]

For a continuous random variable X having density function f(x), the expectation of X is defined as

Example

Suppose that a game is to be played with a single die assumed fair. In this game a player wins $20 if a 2 turns up, $40 if a 4 turns up; loses $30 if a 6 turns up; while the player neither wins nor loses if any other face turns up. Find the expected sum of money to be won.
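A minimal sketch of the calculation, assuming the usual definition of expectation for a discrete random variable (the sum of each value times its probability). The dictionary `payoff` simply encodes the rules of the game stated above; the variable names are illustrative.

```python
from fractions import Fraction

# Winnings in dollars for each face of the die (losses are negative)
payoff = {1: 0, 2: 20, 3: 0, 4: 40, 5: 0, 6: -30}

# Fair die: every face has probability 1/6
p = Fraction(1, 6)

# E(X) = sum of x * P(X = x) over all faces
expected_winnings = sum(x * p for x in payoff.values())

print("E(X) =", expected_winnings)  # 5
```

The player can therefore expect to win $5 per game on average, so a fair stake for playing would be $5.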

Variance and standard deviation

Another important quantity in probability is called the variance defined by:

The variance is a nonnegative number. The positive square root of the variance is called the standard deviation and is given by

- If X is a discrete random variable taking the values x1, x2, . . . , xn and having probability function f(x), then the variance is given by

- If X is a continuous random variable having density function f(x), then the variance is given by

- A graphical representation of the variance for two continuous distributions with the same mean μ can be seen in the graph below

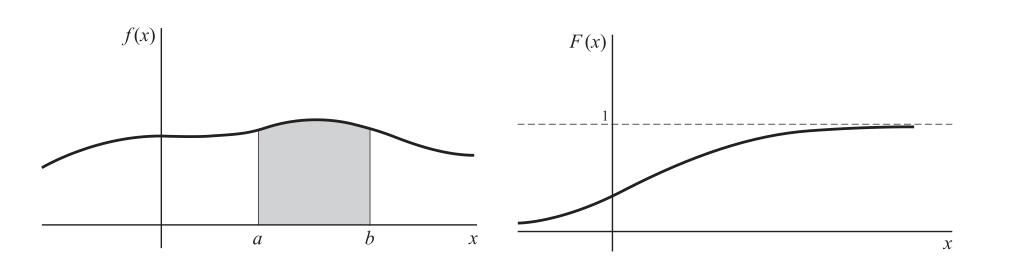
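To make the discrete formula concrete, the sketch below computes the variance and standard deviation of the winnings in the die game from the expected value example. It assumes the standard definition Var(X) = E[(X − μ)²], and also checks the equivalent form E(X²) − μ²; the variable names are illustrative.

```python
from fractions import Fraction
from math import sqrt

# Winnings for each face of a fair die, as in the expected value example
payoff = {1: 0, 2: 20, 3: 0, 4: 40, 5: 0, 6: -30}
p = Fraction(1, 6)

mean = sum(x * p for x in payoff.values())

# Var(X) = sum of (x - mean)^2 * P(X = x)
variance = sum((x - mean) ** 2 * p for x in payoff.values())

# Equivalent shortcut: Var(X) = E(X^2) - mean^2
second_moment = sum(x ** 2 * p for x in payoff.values())

std_dev = sqrt(variance)

print("mean =", mean)
print("variance =", variance, "=", float(variance))
print("standard deviation =", std_dev)
```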

PMF vs. PDF vs. CDF

In probability theory there are three functions that can be a little confusing at first. Let's make the differences clear.

Probability mass function (PMF)

- The probability mass function, denoted as P(X = x), is used for discrete random variables. It assigns probabilities to each possible value that the random variable can take. The PMF gives the probability that the random variable equals a specific value.

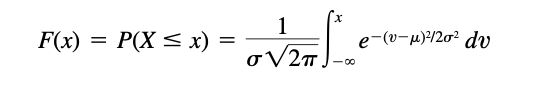

Cumulative distribution function (CDF)

- The cumulative distribution function, denoted as F(x), describes the probability that a random variable takes on a value less than or equal to a given value x. It gives the cumulative probability up to a specific point.

- Since the PDF is the derivative of the CDF, the CDF can be obtained from PDF by integration

Probability density function (PDF)

To determine the distribution of a discrete random variable we can either provide its PMF or CDF. For continuous random variables, the CDF is well-defined so we can provide the CDF. However, the PMF does not work for continuous random variables, because for a continuous random variable P(X=x)=0 for all x ∈ ℝ.

- Instead, we can usually define the probability density function (PDF). The PDF is the density of probability rather than the probability mass. The concept is very similar to mass density in physics: its unit is probability per unit length. [1]

- The probability density function (PDF) is a function used to describe the probability distribution of a continuous random variable. Unlike discrete random variables, which have a countable set of possible values, continuous random variables can take on any value within a specified range. [1]

- The PDF, denoted as f(x), represents the density of the probability distribution of a continuous random variable at a given point x. The value f(x) is not itself a probability; the probability that the random variable falls within a range is the integral of the density over that range: $P(a \le X \le b) = \int_a^b f(x)\,dx$.

- The PDF is the derivative of the CDF, $f(x) = \frac{d}{dx}F(x)$ wherever the derivative exists, so the CDF can be obtained from the PDF by integration. [1]

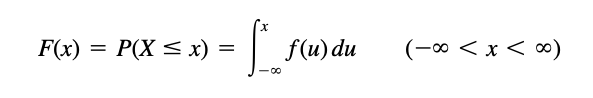
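The relationship between PDF and CDF can be sketched numerically. The density f(x) = 2x on [0, 1] is an assumed toy example, and the midpoint rule is just one simple way to integrate it.

```python
def pdf(x):
    """Illustrative density f(x) = 2x on [0, 1], zero elsewhere (an assumption)."""
    return 2.0 * x if 0.0 <= x <= 1.0 else 0.0

def cdf(x, steps=10_000):
    """Approximate F(x) by midpoint-rule integration of the density from 0 to x."""
    if x <= 0.0:
        return 0.0
    upper = min(x, 1.0)  # the density is zero beyond 1
    h = upper / steps
    return sum(pdf((i + 0.5) * h) for i in range(steps)) * h

# The probability of a range is the difference of two CDF values.
prob_range = cdf(0.5) - cdf(0.2)
print(cdf(0.5), prob_range)
```

For this density the exact CDF is F(x) = x² on [0, 1], so the numerical values can be compared against 0.25 and 0.21.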

Distribution Functions for Random Variables

The distribution function provides important information about the probabilities associated with different values of a random variable. It can be used to calculate probabilities for specific events or to obtain other statistical properties of the random variable. [1]

- It gives the probability that the random variable takes on a value less than or equal to a given value.

The distribution function of a random variable X, denoted as F(x), is defined as: [1]

- F(x) = P(X ≤ x)

where x is any real number, and P(X ≤ x) is the probability that the random variable X is less than or equal to x. [1]

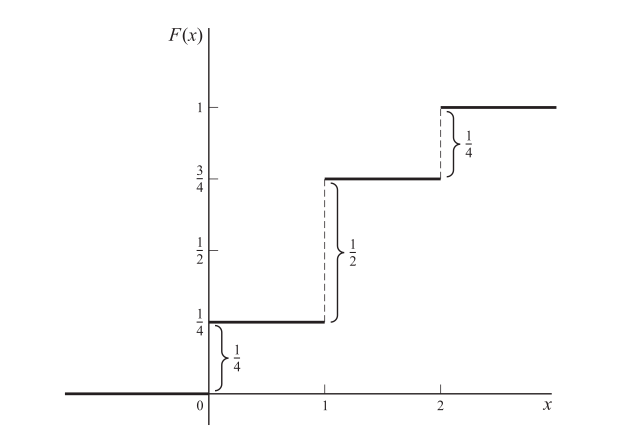

Distribution Functions for Discrete Random Variables

If X takes on only a finite number of values x1, x2, . . . , xn (ordered so that x1 < x2 < . . . < xn), then the distribution function is given by

$$F(x) = \begin{cases} 0 & -\infty < x < x_1 \\ f(x_1) & x_1 \le x < x_2 \\ f(x_1) + f(x_2) & x_2 \le x < x_3 \\ \quad\vdots & \\ f(x_1) + \cdots + f(x_n) & x_n \le x < \infty \end{cases}$$

Example

The following function:

$$F(x) = \begin{cases} 0 & -\infty < x < 0 \\ 1/4 & 0 \le x < 1 \\ 3/4 & 1 \le x < 2 \\ 1 & 2 \le x < \infty \end{cases}$$

can be graphed as follows:

- The magnitudes of the jumps are 1/4, 1/2, 1/4, which are precisely the probabilities from the probability function. This fact enables one to obtain the probability function from the distribution function.

- Because of the appearance of the graph it is often called a staircase function or step function.

- The value of the function at an integer is obtained from the higher step; thus the value at 1 is 3/4 and not 1/4. This is expressed mathematically by stating that the distribution function is continuous from the right at 0, 1, 2.

- As we proceed from left to right (i.e. going upstairs), the distribution function either remains the same or increases, taking on values from 0 to 1. Because of this, it is said to be a monotonically increasing (more precisely, non-decreasing) function.

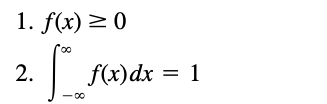
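The step-function behaviour can be reproduced with a short Python sketch. It assumes a probability function with values 1/4, 1/2, 1/4 at the points 0, 1, 2, matching the jump sizes described above; the helper names are hypothetical.

```python
from fractions import Fraction

# Assumed probability function: f(0) = 1/4, f(1) = 1/2, f(2) = 1/4.
pmf = {0: Fraction(1, 4), 1: Fraction(1, 2), 2: Fraction(1, 4)}

def cdf(x):
    """F(x) = P(X <= x): add up the probabilities of all values not exceeding x."""
    return sum(p for value, p in pmf.items() if value <= x)

def left_limit(x):
    """Limit of F just below x: add up the probabilities of all values below x."""
    return sum(p for value, p in pmf.items() if value < x)

def jump(x):
    """Size of the step at x, which recovers the probability f(x)."""
    return cdf(x) - left_limit(x)

print(cdf(1), left_limit(1), jump(1))  # 3/4 1/4 1/2
```

The value at 1 comes from the higher step (3/4), which is the right-continuity noted in the list above.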

Distribution Functions for Continuous Variables

- A nondiscrete random variable X is said to be absolutely continuous, or simply continuous, if its distribution function may be represented as

$$F(x) = P(X \le x) = \int_{-\infty}^{x} f(u)\,du \qquad (-\infty < x < \infty)$$

- where the function f(x) has the properties

$$f(x) \ge 0, \qquad \int_{-\infty}^{\infty} f(x)\,dx = 1$$

- The graphical representation of a possible probability density function (PDF) f(x) and its cumulative distribution function (CDF) F(x) is given by the graph below:

Special Probability Distributions

The Uniform Distribution

A continuous random variable X is said to have a Uniform distribution over the interval [a,b], shown as X ∼ Uniform(a,b), if its PDF is given by [4]

$$f(x) = \begin{cases} \dfrac{1}{b-a} & a < x < b \\ 0 & \text{otherwise} \end{cases}$$

The expected value is therefore

$$E[X] = \int_a^b \frac{x}{b-a}\,dx = \frac{a+b}{2}$$

and variance

$$\mathrm{Var}(X) = \frac{(b-a)^2}{12}$$

Example

- When you flip a fair coin, the probability of the coin landing heads up is equal to the probability that it lands tails up. This is the discrete counterpart of the uniform distribution: every outcome is equally likely.

- When a fair die is rolled, the number appearing on top of the die follows a discrete uniform distribution over the values one to six. The probability that any given number will appear on top of the die is equal to 1/6.
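For the continuous case, the mean (a + b)/2 and variance (b − a)²/12 of Uniform(a, b) can be checked numerically. The sketch below assumes the density 1/(b − a) on (a, b); the interval [2, 5] and the `integrate` helper are illustrative choices.

```python
def uniform_pdf(x, a, b):
    """Density of Uniform(a, b): constant 1/(b - a) inside the interval."""
    return 1.0 / (b - a) if a < x < b else 0.0

def integrate(g, lo, hi, steps=10_000):
    """Midpoint-rule approximation of the integral of g over [lo, hi]."""
    h = (hi - lo) / steps
    return sum(g(lo + (i + 0.5) * h) for i in range(steps)) * h

a, b = 2.0, 5.0  # assumed interval for illustration
mean = integrate(lambda x: x * uniform_pdf(x, a, b), a, b)
var = integrate(lambda x: (x - mean) ** 2 * uniform_pdf(x, a, b), a, b)

print(mean, (a + b) / 2)        # numerical vs closed-form mean
print(var, (b - a) ** 2 / 12)   # numerical vs closed-form variance
```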

The Normal Distribution

The normal distribution is by far the most important probability distribution. One of the main reasons for that is the Central Limit Theorem (CLT).

- The notation for the random variable is written as X ∼ N(μ,σ).

- The normal distribution is also called the Gaussian distribution. Its density function is given by

$$f(x) = \frac{1}{\sigma\sqrt{2\pi}}\, e^{-(x-\mu)^2/(2\sigma^2)} \qquad (-\infty < x < \infty)$$

where μ and σ are the mean and standard deviation, respectively.

- Let Z be the standardized variable corresponding to X, so that Z has mean 0 and standard deviation 1:

$$Z = \frac{X-\mu}{\sigma}$$

Some Properties of the Normal Distribution

Graphical representation

The graph of the density function of the standardized variable Z is sometimes called the standard normal curve. The areas within 1, 2, and 3 standard deviations of the mean are approximately 68.27%, 95.45%, and 99.73% of the total area, respectively.
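The areas within 1, 2, and 3 standard deviations of the mean can be computed from the standard normal CDF. The sketch below expresses that CDF through the error function from Python's `math` module; the function names are illustrative.

```python
import math

def standard_normal_cdf(z):
    """P(Z <= z) for a standard normal variable, written via the error function."""
    return 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))

def area_within(k):
    """P(-k <= Z <= k): area within k standard deviations of the mean."""
    return standard_normal_cdf(k) - standard_normal_cdf(-k)

for k in (1, 2, 3):
    print(k, round(area_within(k), 4))
```

Because of the standardization Z = (X − μ)/σ, the same areas apply to any normal variable, not only to the standard one.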

Central Limit Theorem (CLT)

The central limit theorem (CLT) is one of the most important results in probability theory. It tells us that, under certain conditions, the sum of a large number of random variables is approximately normal.

The central limit theorem states that if you have a population with mean μ and finite standard deviation σ and take sufficiently large random samples of size n from the population with replacement, then the distribution of the sample means will be approximately normal, with mean μ and standard deviation σ/√n. This holds regardless of whether the source population is normal or skewed, provided the sample size is sufficiently large (a common rule of thumb is n > 30). If the population itself is normal, the sample means are normally distributed for any sample size, including samples smaller than 30.
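A small simulation can make the theorem concrete. The sketch below is only an illustration under assumed settings: the population is Exponential with rate 1 (strongly skewed, with mean 1 and standard deviation 1), the sample size is 50, and the seed is fixed so the run is reproducible.

```python
import random
import statistics

def sample_means(n, trials, rate=1.0, seed=0):
    """Draw `trials` samples of size n from an Exponential(rate) population
    and return the mean of each sample."""
    rng = random.Random(seed)
    return [sum(rng.expovariate(rate) for _ in range(n)) / n
            for _ in range(trials)]

n = 50
means = sample_means(n=n, trials=2000)

# The CLT predicts mean close to 1 and standard deviation close to 1 / sqrt(n).
print(statistics.fmean(means), statistics.stdev(means), 1 / n ** 0.5)
```

Plotting a histogram of `means` would show the bell shape even though the individual observations are far from normal.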

The Binomial Distribution

- Suppose that we have an experiment such as tossing a coin repeatedly or choosing a marble from an urn repeatedly.

- Each toss or selection is called a trial.

- In any single trial there will be a probability associated with a particular event such as head on the coin, 4 on the die, or selection of a red marble. In some cases this probability will not change from one trial to the next (as in tossing a coin or die).

- Such trials are then said to be independent and are often called Bernoulli trials after James Bernoulli who investigated them at the end of the seventeenth century.

- If n is large and if neither p nor q is too close to zero, the binomial distribution can be closely approximated by a normal distribution.

Let p be the probability that an event will happen in any single Bernoulli trial (called the probability of success). Then q = 1 − p is the probability that the event will fail to happen in any single trial (called the probability of failure). The probability that the event will happen exactly x times in n trials (i.e., x successes and n − x failures will occur) is given by the probability function

$$f(x) = P(X = x) = \binom{n}{x} p^x q^{n-x} = \frac{n!}{x!\,(n-x)!}\, p^x q^{n-x}, \qquad x = 0, 1, \ldots, n$$

The key characteristics of a binomial distribution are as follows:

- The trials are independent: The outcome of each trial does not depend on the outcome of any other trial.

- Each trial has two possible outcomes: success or failure.

- The probability of success remains constant across all trials, denoted as p.

- The number of trials is fixed, denoted as n.

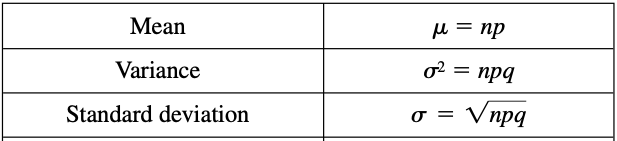

Some Properties of Binomial Distribution

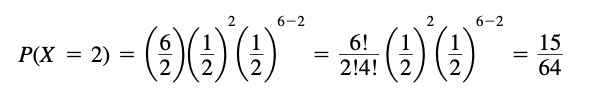

Example

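The probability of getting exactly 2 heads in 6 tosses of a fair coin is:

$$P(X = 2) = \binom{6}{2}\left(\frac{1}{2}\right)^{2}\left(\frac{1}{2}\right)^{4} = \frac{15}{64}$$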
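The coin-toss example can be reproduced with a minimal Python sketch of the binomial probability function. The function name `binomial_pmf` is hypothetical; `math.comb` supplies the binomial coefficient.

```python
from fractions import Fraction
from math import comb

def binomial_pmf(x, n, p):
    """P(X = x): probability of exactly x successes in n independent trials."""
    return comb(n, x) * p ** x * (1 - p) ** (n - x)

# Exactly 2 heads in 6 tosses of a fair coin.
print(binomial_pmf(2, 6, Fraction(1, 2)))  # 15/64
```

Passing `p` as a `Fraction` keeps the arithmetic exact; a float such as `0.5` works as well.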

The Bernoulli Distribution

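- Bernoulli distributions arise anytime we have a response variable that takes only two possible values, and we label one of these outcomes as 1 and the other as 0. If θ denotes the probability of the outcome labeled 1, then P(X = 1) = θ and P(X = 0) = 1 − θ, written X ∼ Bernoulli(θ).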

- For example, 1 could correspond to success and 0 to failure of some quality test applied to an item produced in a manufacturing process.

- Alternatively, we could be randomly selecting an individual from a population and recording a 1 when the individual is female and a 0 if the individual is a male. In this case, θ is the proportion of females in the population.

- The binomial distribution is applicable to any situation involving n independent performances of a random system; for each performance, we are recording whether a particular event has occurred, called a success, or has not occurred, called a failure.

Difference between the Binomial and Bernoulli Distribution

- The binomial distribution is derived from multiple independent Bernoulli trials. It represents the number of successes in these trials.

- Each trial in the binomial distribution follows a Bernoulli distribution.

- The Bernoulli distribution models a single trial with two possible outcomes, while the binomial distribution models the number of successes in a fixed number of independent Bernoulli trials. The binomial distribution extends the concept of the Bernoulli distribution to multiple trials.

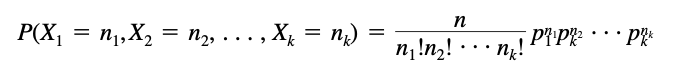

Multinomial Distribution

Suppose that events A1, A2, . . . , Ak are mutually exclusive, and can occur with respective probabilities p1, p2, . . . , pk where p1 + p2 + ... + pk = 1. If X1, X2, . . . , Xk are the random variables respectively giving the number of times that A1, A2, . . . , Ak occur in a total of n trials, so that X1 + X2 + ... + Xk = n, then

$$P(X_1 = n_1, X_2 = n_2, \ldots, X_k = n_k) = \frac{n!}{n_1!\, n_2! \cdots n_k!}\; p_1^{n_1} p_2^{n_2} \cdots p_k^{n_k}$$

where n1 + n2 + ... + nk = n.

- It is a generalization of the Binomial distribution

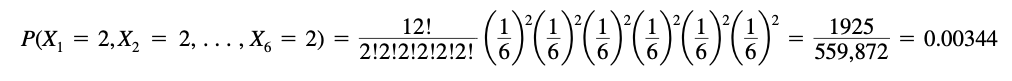

Example

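If a fair die is to be tossed 12 times, the probability of getting 1, 2, 3, 4, 5 and 6 points exactly twice each is

$$P(X_1 = 2, X_2 = 2, \ldots, X_6 = 2) = \frac{12!}{2!\,2!\,2!\,2!\,2!\,2!}\left(\frac{1}{6}\right)^{12} = \frac{1925}{559872} \approx 0.00344$$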
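The die example can be verified with a short Python sketch of the multinomial probability, n!/(n1! ⋯ nk!) multiplied by the product of the p_i raised to n_i. The function name `multinomial_pmf` is hypothetical.

```python
from fractions import Fraction
from math import factorial, prod

def multinomial_pmf(counts, probs):
    """P(X1 = n1, ..., Xk = nk) for n = n1 + ... + nk independent trials."""
    n = sum(counts)
    coefficient = factorial(n)
    for c in counts:
        coefficient //= factorial(c)  # each division is exact
    return coefficient * prod(p ** c for p, c in zip(probs, counts))

# Each face of a fair die exactly twice in 12 tosses.
result = multinomial_pmf([2] * 6, [Fraction(1, 6)] * 6)
print(result, float(result))
```

With k = 2 the same function reduces to the binomial probability function.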

The Poisson Distribution

- The Poisson distribution is a discrete distribution that models the number of independent events occurring in a fixed interval of time at a known constant mean rate.

- In other words, Poisson distribution is used to estimate how many times an event is likely to occur within the given period of time.

- Poisson distribution has wide use in the fields of business as well as in biology.

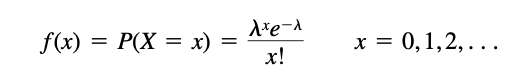

The probability function is given by

$$f(x) = P(X = x) = \frac{\lambda^x e^{-\lambda}}{x!}, \qquad x = 0, 1, 2, \ldots$$

where λ is the Poisson rate parameter, equal to the expected number of events in the fixed time interval.

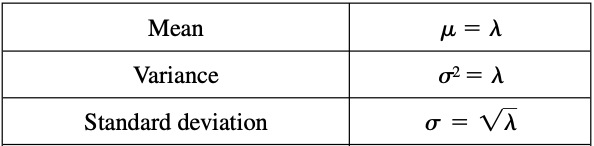

Some properties of Poisson distribution

Binomial and Poisson approximation

In the binomial distribution, if n is large while the probability p of occurrence of an event is close to zero, so that q = 1 - p is close to 1, the event is called a rare event. In practice we shall consider an event as rare if the number of trials is at least 50 (n ≥ 50) while np is less than 5. For such cases the binomial distribution is very closely approximated by the Poisson distribution with λ = np.

Example

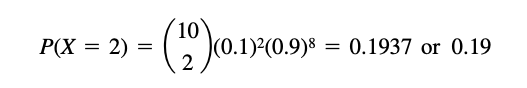

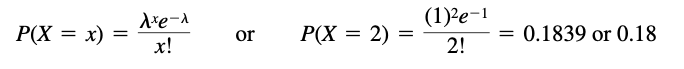

- Ten percent of the tools produced in a certain manufacturing process turn out to be defective. Find the probability that in a sample of 10 tools chosen at random, exactly 2 will be defective, by using (1) the binomial distribution, (2) the Poisson approximation to the binomial distribution.

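- The probability of a defective tool is p = 0.1. Let X denote the number of defective tools out of 10 chosen. Then, according to the binomial distribution,

$$P(X = 2) = \binom{10}{2}(0.1)^2(0.9)^8 \approx 0.1937$$

- We have λ = np = (10)(0.1) = 1. Then, according to the Poisson distribution,

$$P(X = 2) = \frac{\lambda^2 e^{-\lambda}}{2!} = \frac{e^{-1}}{2} \approx 0.1839$$

- In general, the approximation is good if p ≤ 0.1 and λ = np ≤ 5.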
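The comparison in the defective-tools example can be reproduced with a short Python sketch. The function names are hypothetical; the values n = 10 and p = 0.1 come from the example.

```python
from math import comb, exp, factorial

def binomial_pmf(x, n, p):
    """Exact binomial probability of x successes in n trials."""
    return comb(n, x) * p ** x * (1 - p) ** (n - x)

def poisson_pmf(x, lam):
    """Poisson probability of x events when the expected count is lam."""
    return lam ** x * exp(-lam) / factorial(x)

n, p = 10, 0.1
exact = binomial_pmf(2, n, p)
approx = poisson_pmf(2, n * p)  # Poisson approximation with lambda = n * p

print(round(exact, 4), round(approx, 4))
```

Increasing n while holding np fixed (for example n = 1000, p = 0.001) brings the two values much closer together.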

The Exponential Distribution

The exponential distribution is one of the widely used continuous distributions. It is often used to model the time elapsed between events. [4]

- It is defined by the density: [2]

$$f(x) = \begin{cases} \lambda e^{-\lambda x} & x \ge 0 \\ 0 & x < 0 \end{cases}$$

- An exponential distribution can often be used to model lifelengths. For example, the lifelength of a certain type of light bulb produced by a manufacturer might follow an Exponential(λ) distribution for an appropriate choice of λ. The probability that the lifelength X of a randomly selected light bulb from this manufacturer exceeds x units of time can be calculated as follows: [2]

$$P(X > x) = \int_x^{\infty} \lambda e^{-\lambda t}\,dt = e^{-\lambda x}$$

- The graph of the Exponential(λ) density depends on the parameter λ: [2]

Example

- Imagine you are at a store and are waiting for the next customer. In each millisecond, the probability that a new customer enters the store is very small. You can imagine that, in each millisecond, a coin (with a very small P(H)) is tossed, and if it lands heads a new customer enters. If you toss a coin every millisecond, the time until a new customer arrives approximately follows an exponential distribution. [4]
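The coin-per-millisecond picture can be checked numerically. The sketch below assumes P(H) = λ·Δt for a toss every Δt = 0.001 seconds and a hypothetical rate of λ = 2 customers per second; it compares the probability of no arrival by time t with the exponential value e^(−λt).

```python
import math

def survival_discrete(t, rate, dt=0.001):
    """P(no arrival by time t) when a coin with P(H) = rate * dt is tossed every dt."""
    tosses = round(t / dt)
    return (1.0 - rate * dt) ** tosses

def survival_exponential(t, rate):
    """P(X > t) for X ~ Exponential(rate)."""
    return math.exp(-rate * t)

rate = 2.0  # hypothetical arrival rate, chosen only for illustration
for t in (0.5, 1.0, 1.5):
    print(t, survival_discrete(t, rate), survival_exponential(t, rate))
```

Shrinking `dt` further makes the two columns agree to more digits, which is the sense in which the waiting time is approximately exponential.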

The Gamma Distribution

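The Gamma(α, λ) distribution, with α > 0 and λ > 0, is defined by the density

$$f(x) = \begin{cases} \dfrac{\lambda^{\alpha} x^{\alpha-1} e^{-\lambda x}}{\Gamma(\alpha)} & x > 0 \\ 0 & x \le 0 \end{cases}$$

where Γ is the gamma function,

$$\Gamma(\alpha) = \int_0^{\infty} t^{\alpha-1} e^{-t}\,dt$$

- The case α = 1 corresponds to the Exponential(λ) distribution: Gamma(1, λ) = Exponential(λ) [2]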
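The identity Gamma(1, λ) = Exponential(λ) can be checked numerically. The sketch below assumes the rate parameterization of the Gamma density, λ^α x^(α−1) e^(−λx) / Γ(α) for x > 0, and uses `math.gamma` for Γ; the function names and the value λ = 2 are illustrative.

```python
import math

def gamma_pdf(x, alpha, lam):
    """Gamma(alpha, lam) density in the rate parameterization (an assumption)."""
    if x <= 0:
        return 0.0
    return lam ** alpha * x ** (alpha - 1) * math.exp(-lam * x) / math.gamma(alpha)

def exponential_pdf(x, lam):
    """Exponential(lam) density."""
    return lam * math.exp(-lam * x) if x >= 0 else 0.0

lam = 2.0  # illustrative rate
for x in (0.1, 1.0, 3.0):
    print(x, gamma_pdf(x, 1.0, lam), exponential_pdf(x, lam))
```

For α > 1 the Gamma density starts at zero and rises to a peak before decaying, unlike the exponential density.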

Example

In the following graph, the Exponential density (solid line) and the Gamma density (dotted line) are plotted: [2]

References

- [1] Spiegel, M. R., Schiller, J. T., & Srinivasan, A. Probability and Statistics: based on Schaum’s outline of Probability and Statistics, published 2001, https://ci.nii.ac.jp/ncid/BA77714681

- [2] Evans, M. J., & Rosenthal, J. S. Probability and Statistics: The Science of Uncertainty, published 2009, WH Freeman.

- [3] Casella, G., & Berger, R. L. Statistical Inference, published 2021, Cengage Learning.

- [4] Pishro-Nik, H. Introduction to Probability, Statistics, and Random Processes, published 2014, https://www.probabilitycourse.com/preface.php